Category: Blog

-

A User’s Guide to FlexAttention in FlashAttention CuTe DSL

Many variants of attention (Vaswani et al., 2017) have become popular in recent years, for reasons related to performance and model quality. These include: The PyTorch team at Meta recognized that most of these variants (including all of the above) can be unified under one elegant framework, dubbed FlexAttention (Guessous et al., 2024). This simple Go to article…

-

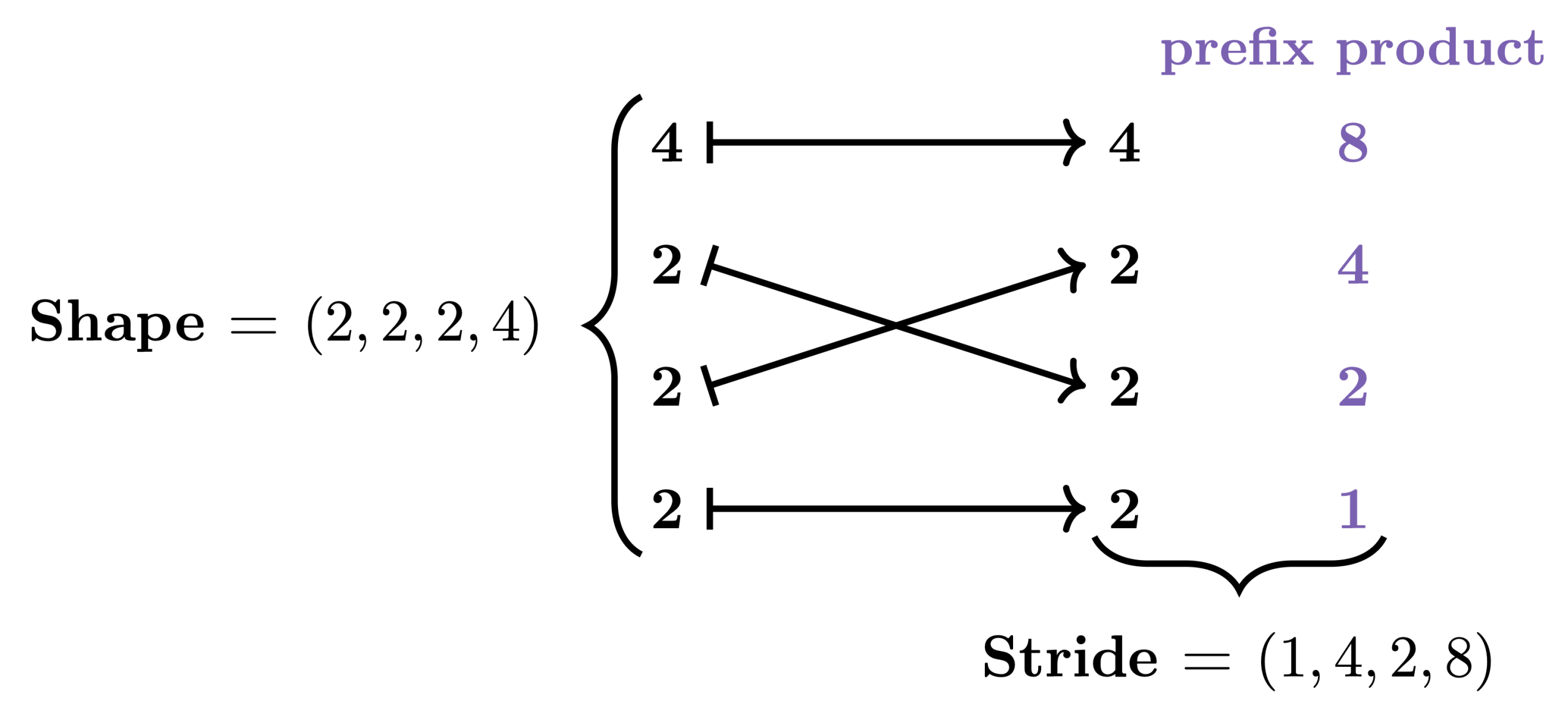

Categorical Foundations for CuTe Layouts

In GPU programming, performance depends critically on how data is stored and accessed in memory. While the data we care about is typically multi-dimensional, the GPU’s memory is fundamentally one-dimensional. This means that when we want to load, store, or otherwise manipulate data, we need to map its multi-dimensional logical coordinates to one-dimensional physical coordinates. Go to article…

-

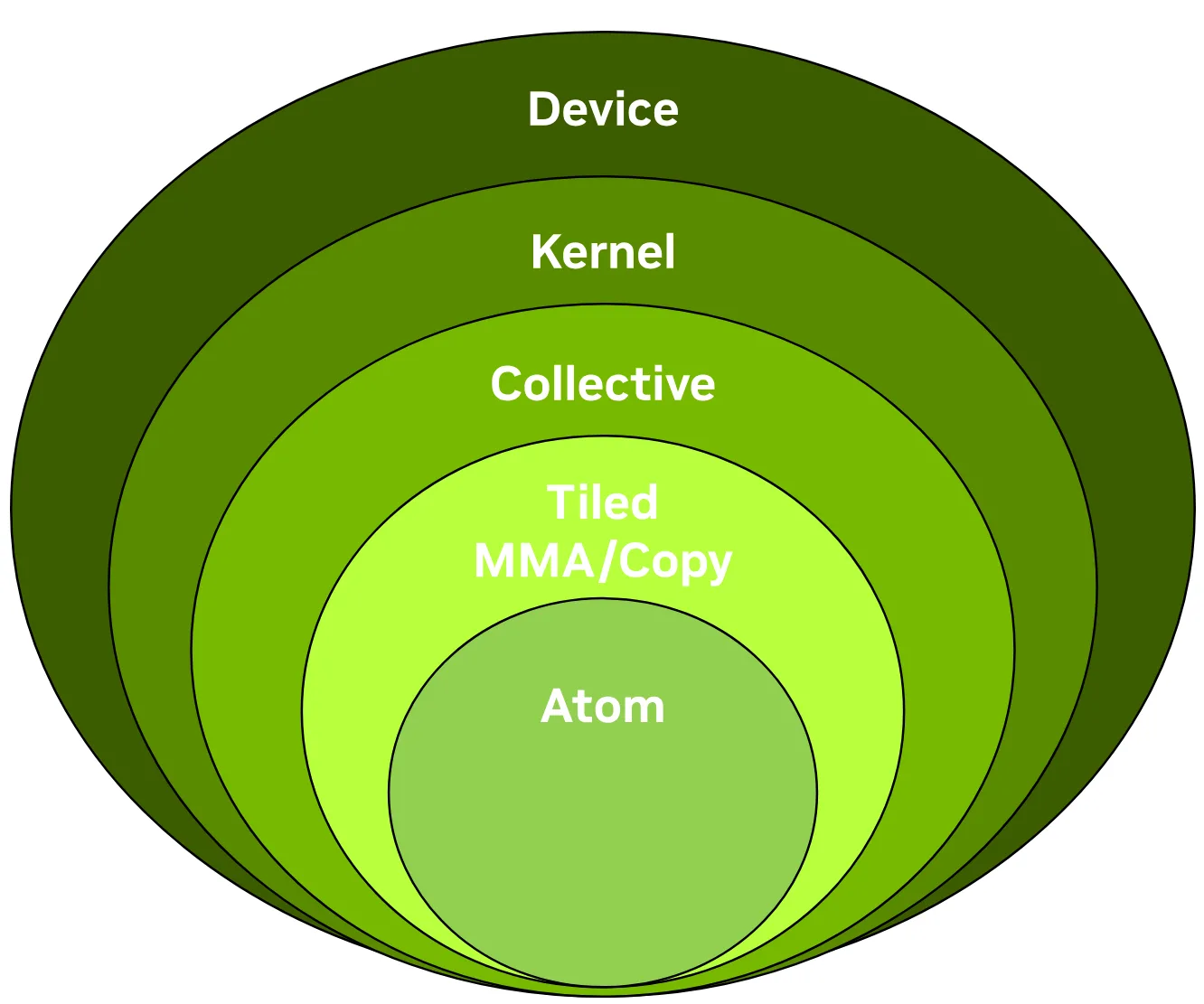

CUTLASS 3.x APIs: Orthogonal, Reusable, and Composable Abstractions for GEMM Kernel Design (External)

In this blog post presented on the NVIDIA technical blog, we give a concise introduction to the CUTLASS 3.x APIs, focusing on the collective, kernel, and device layers and the functionality of the collective builders. This post was authored in conjunction with members of the CUTLASS team. Go to article…

-

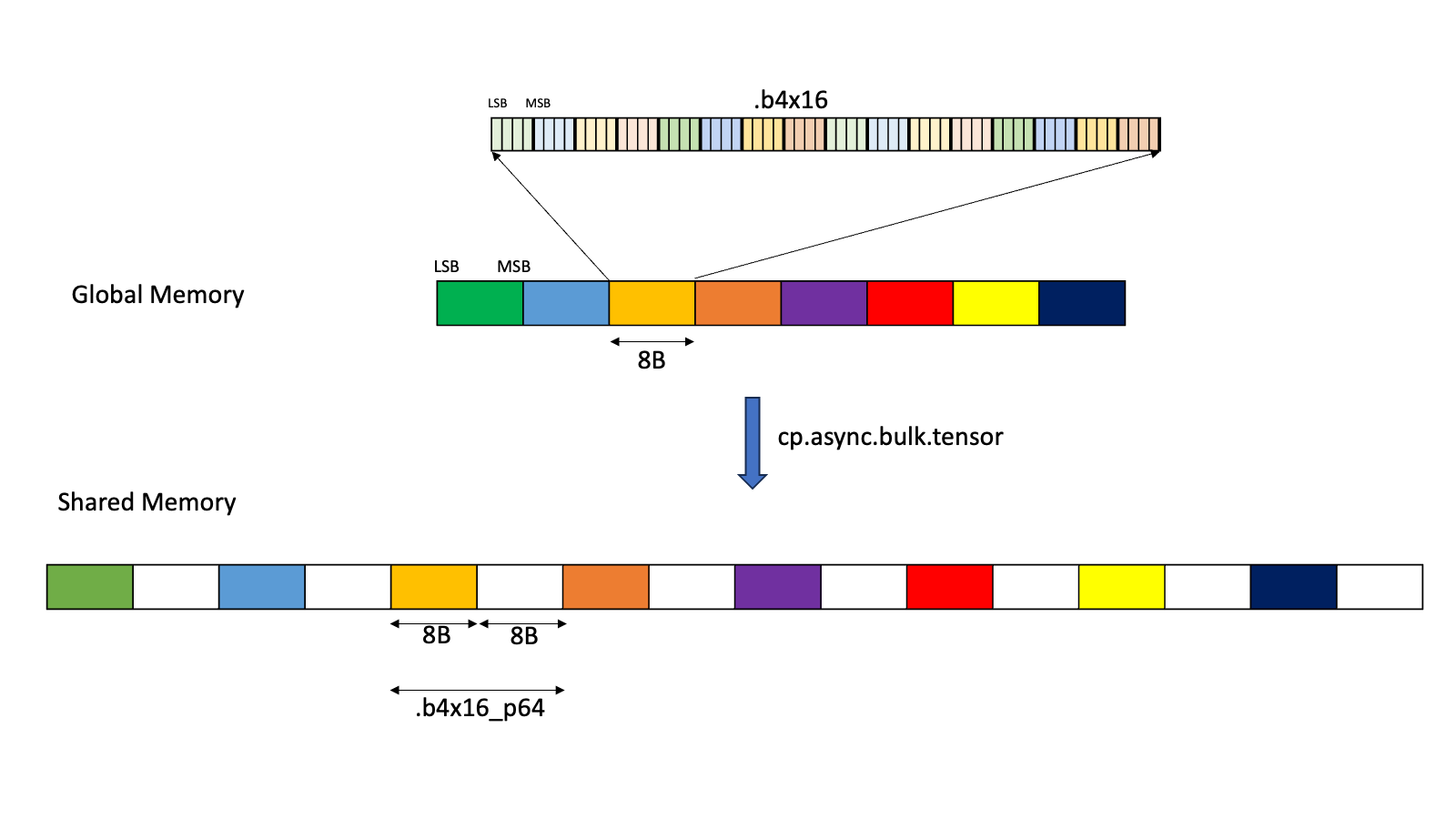

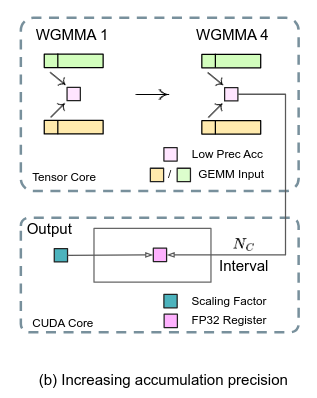

CUTLASS Tutorial: Sub-byte GEMM on NVIDIA® Blackwell GPUs

Welcome to part 3 of our series investigating GEMM on the NVIDIA Blackwell architecture. In parts 1 and 2, we looked at the Tensor Memory and 2 SM capabilities of the new Blackwell Tensor Core UMMA instructions and how to work with them in CUTLASS. In this part, we introduce low-precision computation and then discuss Go to article…

-

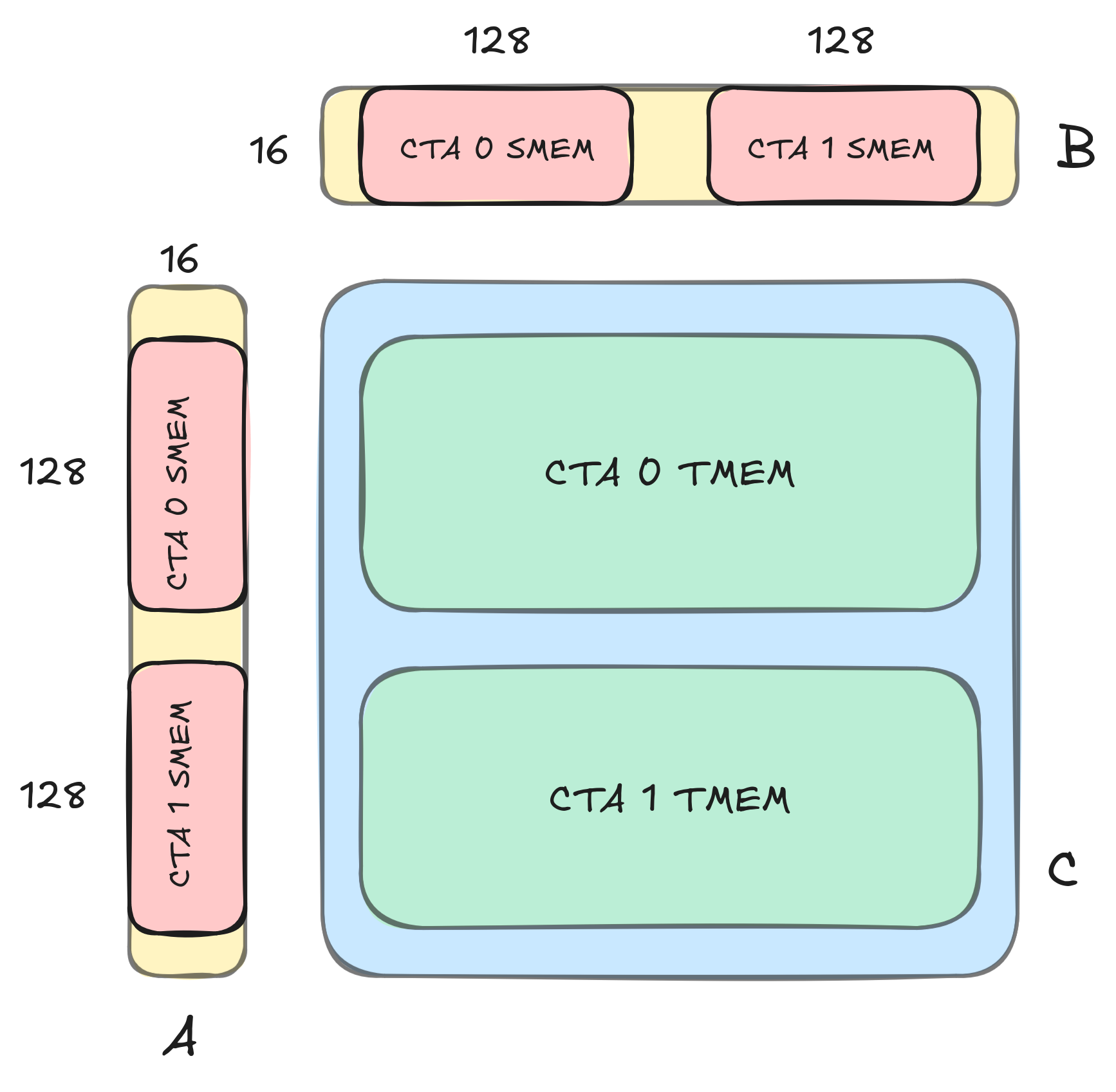

CUTLASS Tutorial: GEMM with Thread Block Clusters on NVIDIA® Blackwell GPUs

Welcome to part 2 of our series investigating GEMM on the NVIDIA Blackwell architecture. In part 1, we introduced some key new features available on NVIDIA Blackwell GPUs, including Tensor Memory, and went over how to write a simple CUTLASS GEMM kernel that uses the new UMMA instructions (tcgen05.mma) to target the Blackwell Tensor Cores. Go to article…

-

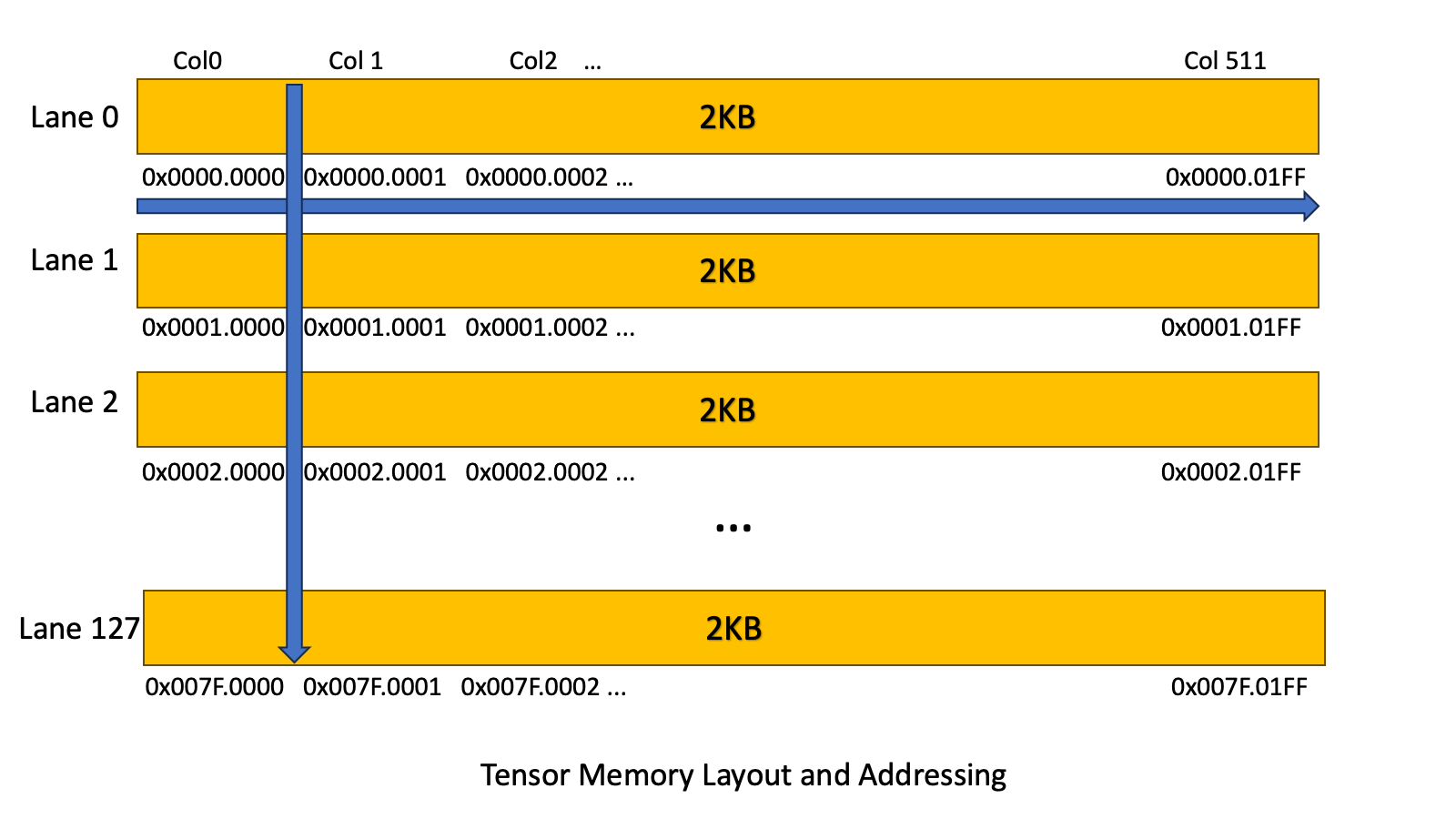

CUTLASS Tutorial: Writing GEMM Kernels Using Tensor Memory For NVIDIA® Blackwell GPUs

The NVIDIA Blackwell architecture introduces some new features that significantly change the shape of a GEMM kernel. In this series of posts, we explore the new features available on Blackwell and examine how to write CUTLASS GEMM kernels that utilize these new features by drawing on the CuTe tutorial examples. The goal of this series Go to article…

-

DeepSeek-R1 and FP8 Mixed-Precision Training

DeepSeek has shocked the world with the release of their reasoning model DeepSeek-R1. Similar to OpenAI’s o1 and Google Gemini’s Flash Thinking, the R1 model aims to improve the quality of its replies by generating a “chain of thought” before responding to a prompt. The excitement around R1 stems from it achieving parity with o1 Go to article…

-

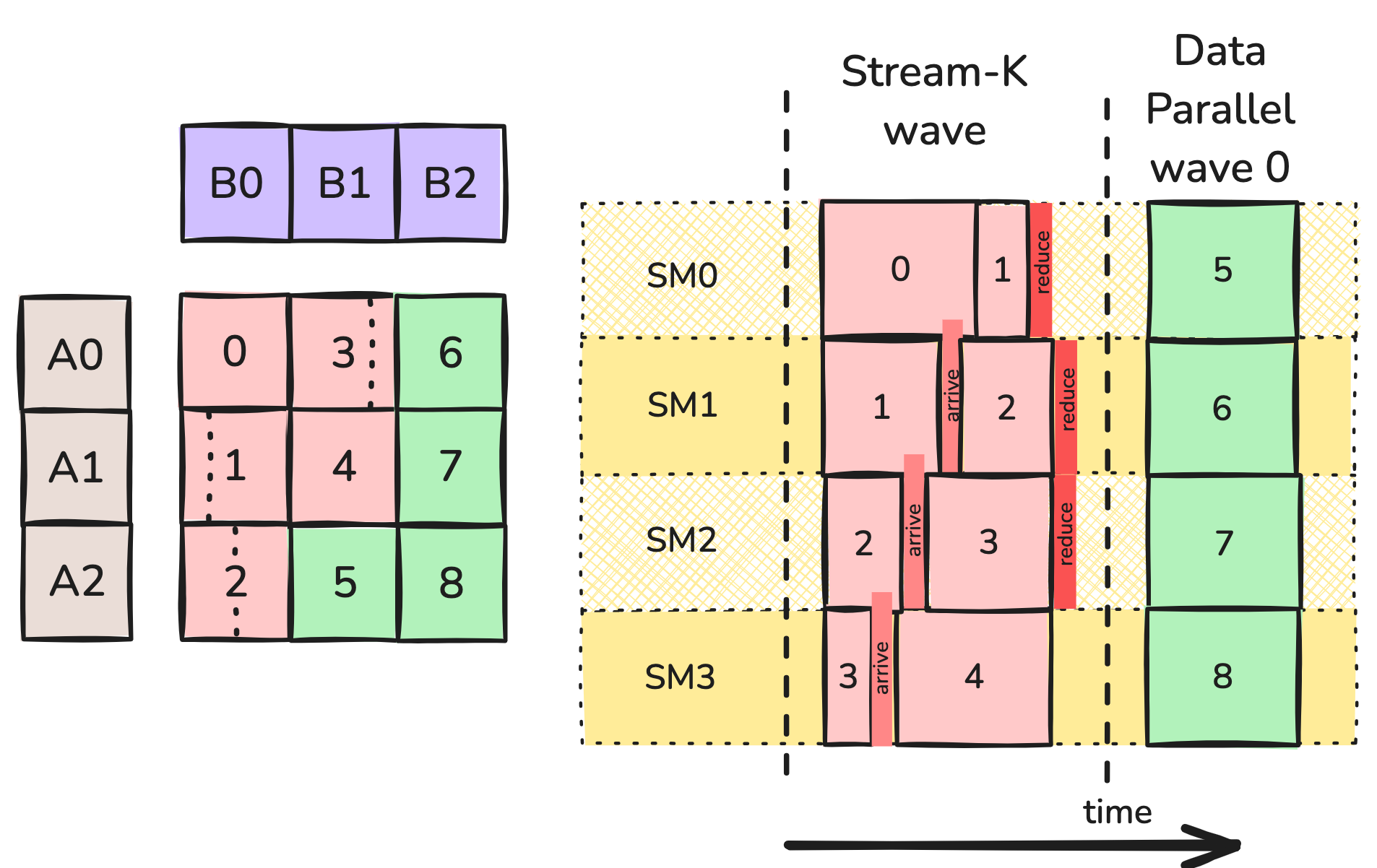

CUTLASS Tutorial: Persistent Kernels and Stream-K

Welcome to Part 3 of our tutorial series on GEMM (GEneral Matrix Multiplication). In Parts 1 and 2, we discussed GEMM at length from the perspective of a single threadblock, introducing the WGMMA matmul primitive, pipelining, and warp specialization. In this part, we will examine GEMM from the perspective of the entire grid. At this Go to article…

-

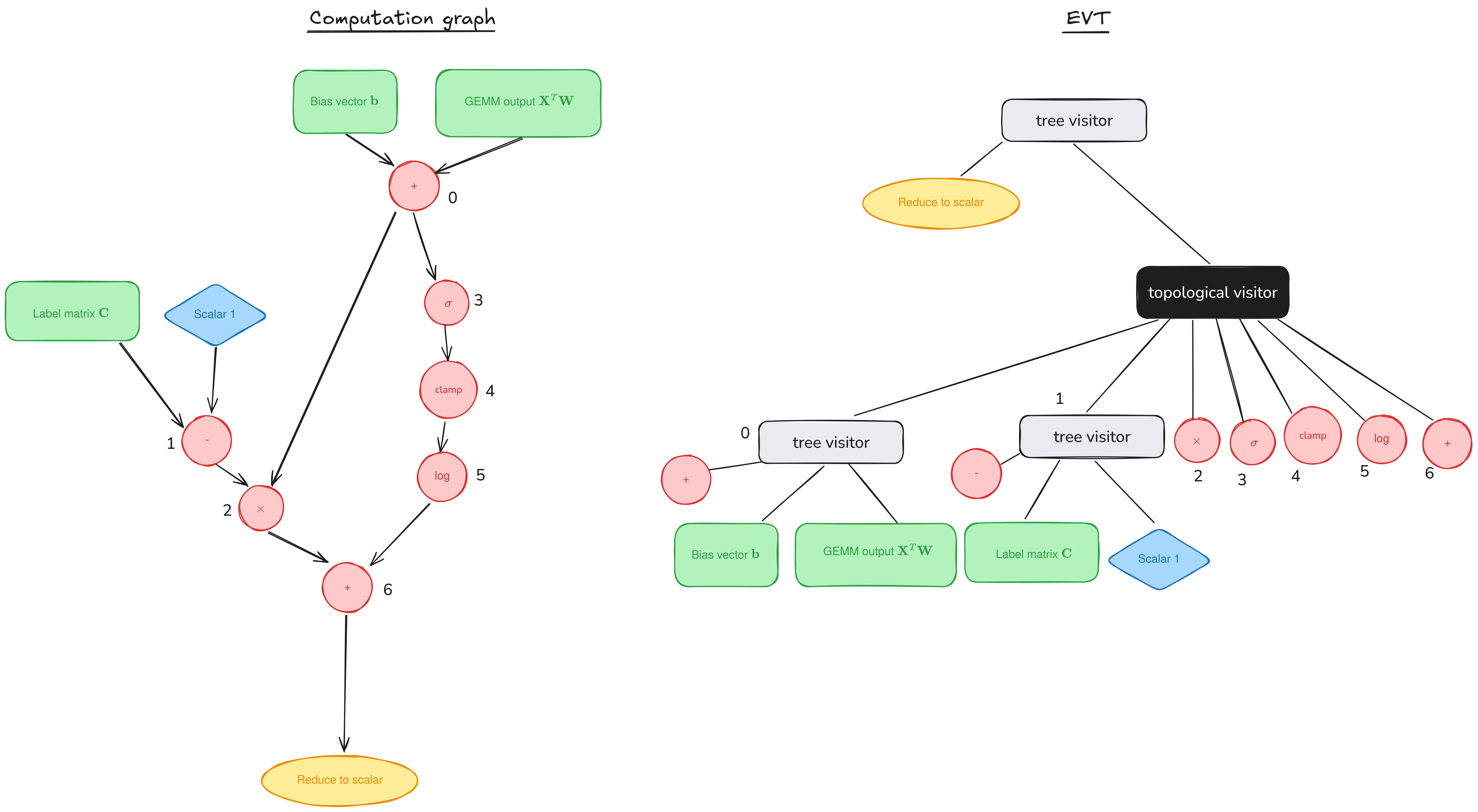

Epilogue Fusion in CUTLASS with Epilogue Visitor Trees

Welcome to a supplemental article for our tutorial series on GEMM (GEneral Matrix Multiplication). Posts in the main series (1, 2) have discussed performant implementations of GEMM on NVIDIA GPUs by looking at the mainloop, the part responsible for the actual GEMM computation. But the mainloop is only a part of the CUTLASS workload. In Go to article…

-

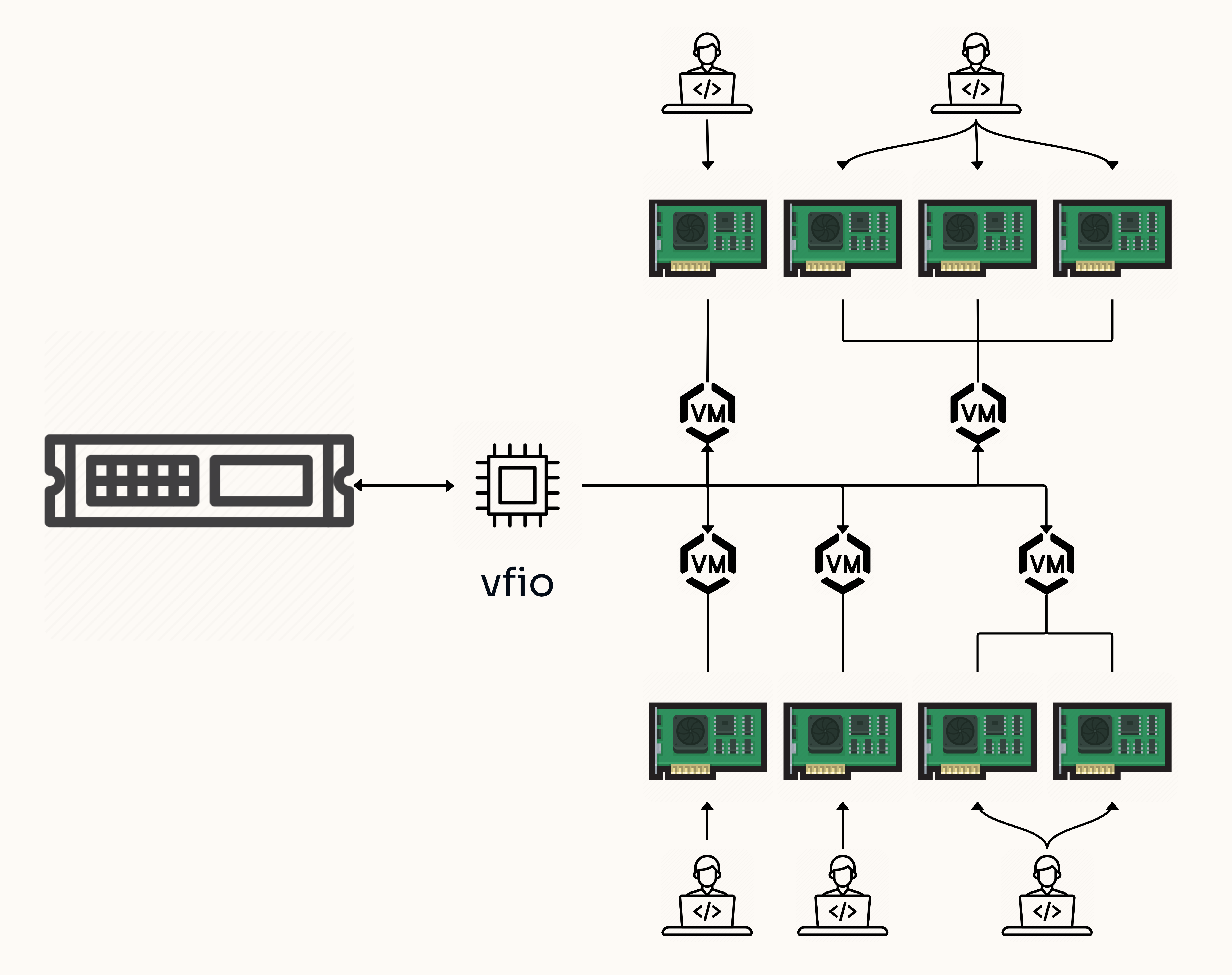

GPU passthrough on Proxmox VE 8.2

In this guide, we will walk through the steps to enable GPU passthrough, and by extension PCIe passthrough, on a virtual machine (VM) deployed through Proxmox. PCIe passthrough provides a path for VMs to directly access the underlying PCIe hardware, which in this article is an NVIDIA® A30 GPU. This setup is ideal for scenarios Go to article…